The Multi-Armed Bandit Problem-Explained

By a mysterious writer

Description

Multi-Armed Bandit Problem, Bandit Multi-Armed Problem, Multi-armed, reinforcement learning, machine learning, data science

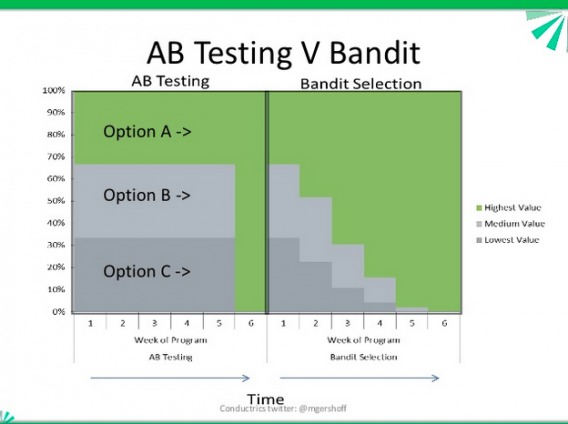

Guide to Multi-Armed Bandit: When to Do Bandit Tests

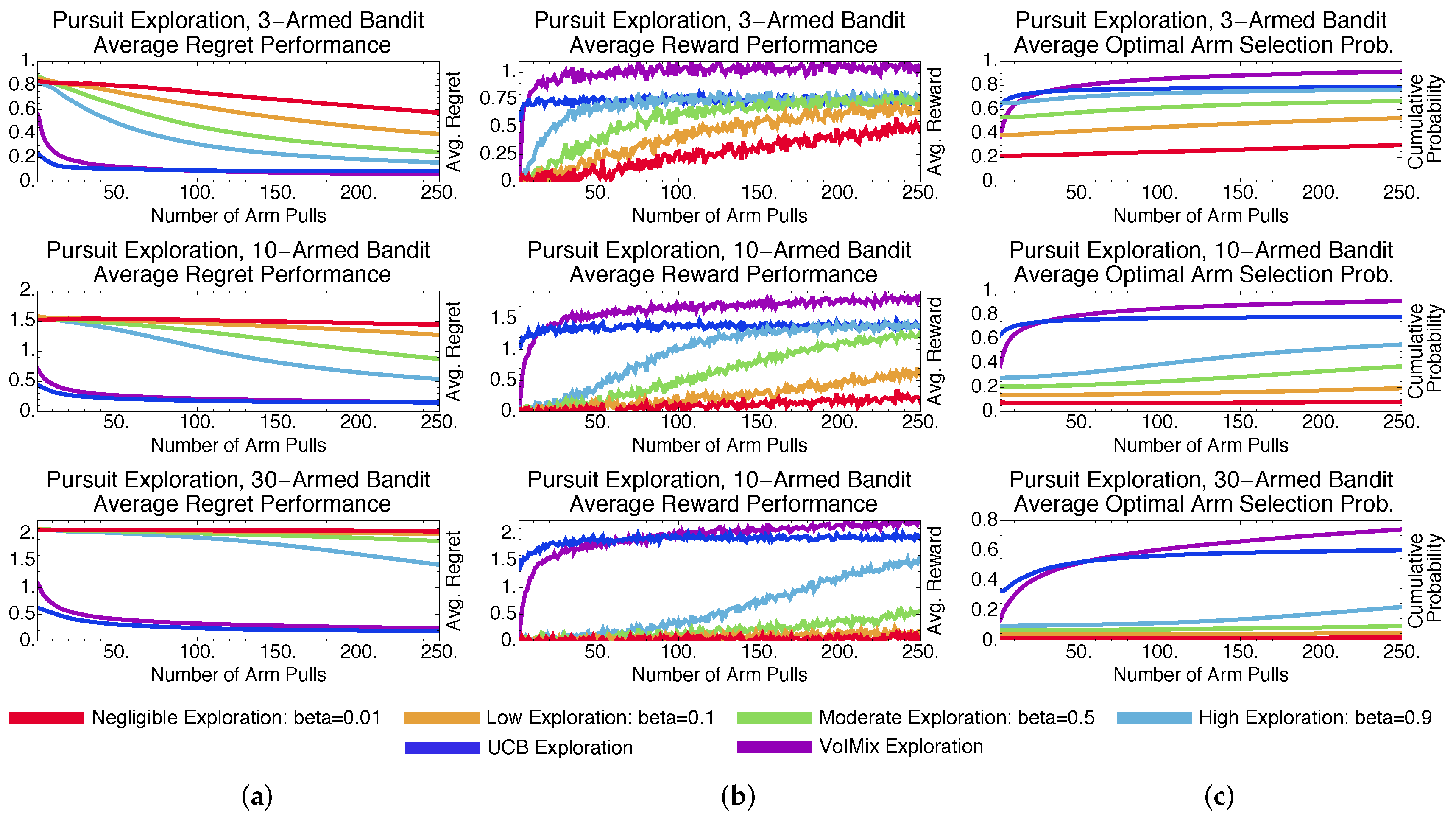

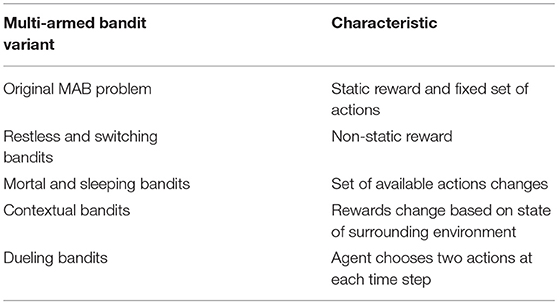

Entropy, Free Full-Text

How to build better contextual bandits machine learning models

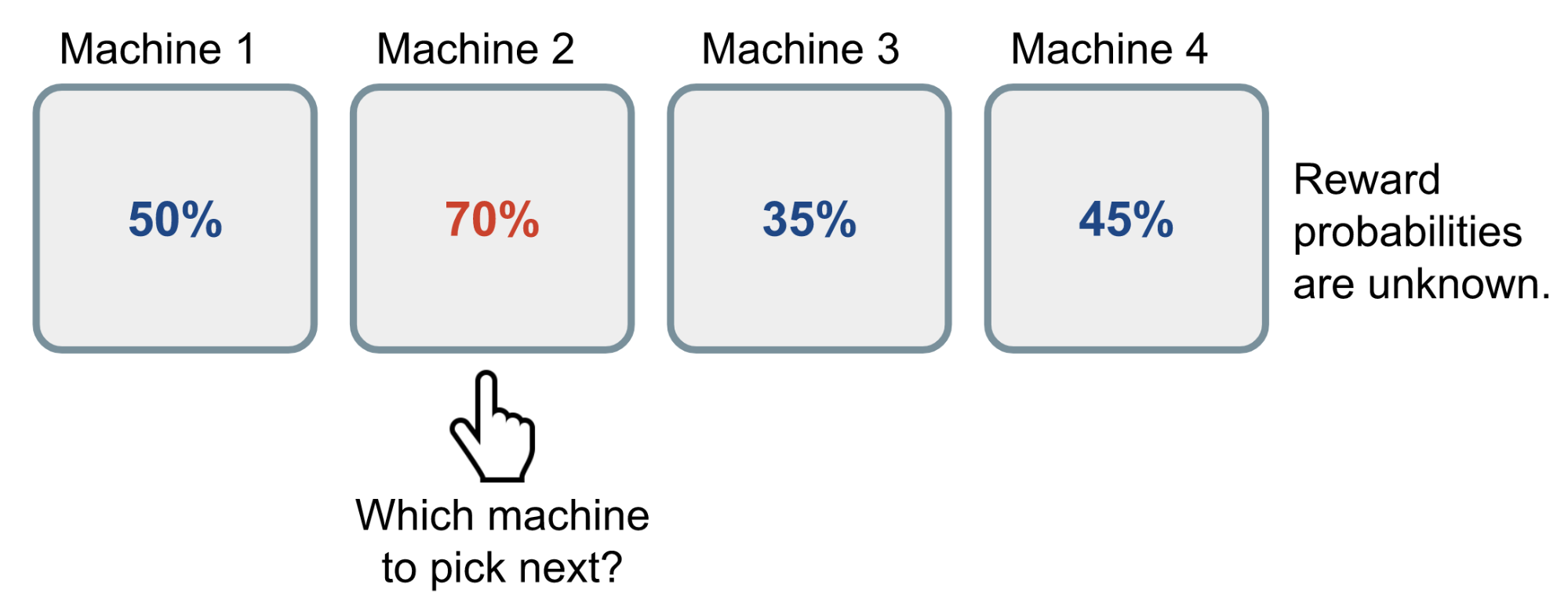

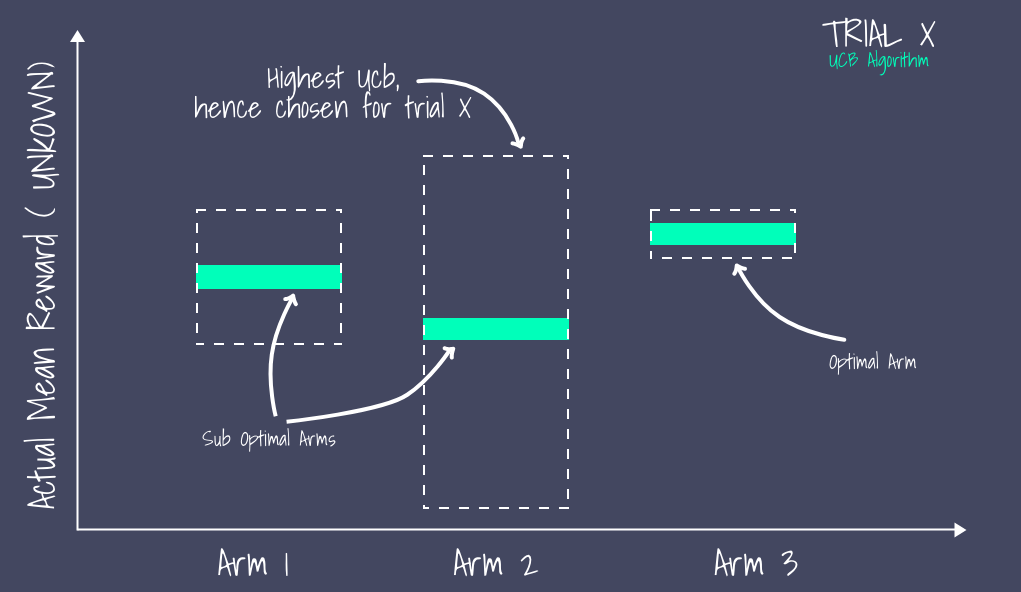

A multi-armed bandit problem, or simply a bandit problem, is a sequential allocation problem defined by a set of actions. At each time step, a ...

Regret Analysis of Stochastic and Nonstochastic Multi-Armed Bandit Problems (Foundations and Trends® in Machine Learning)
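To make the sequential allocation setting described above concrete, here is a minimal Python sketch of one simple strategy, epsilon-greedy, run against a simulated bandit. This is an illustration only: the function name `run_epsilon_greedy`, the Bernoulli (0/1) reward model, and the parameter values are assumptions chosen for the example and do not come from any of the works listed here.

```python
import random


def run_epsilon_greedy(true_means, steps=5000, epsilon=0.1, seed=0):
    """Simulate epsilon-greedy on a Bernoulli bandit (hypothetical helper).

    true_means: assumed success probability of each arm, unknown to the learner.
    Returns (pull counts per arm, estimated mean per arm, total reward).
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms        # number of times each arm was pulled
    estimates = [0.0] * n_arms   # running average reward of each arm
    total_reward = 0.0

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: pick an arm uniformly at random.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: pick the arm with the highest current estimate.
            arm = max(range(n_arms), key=lambda a: estimates[a])

        # Assumed reward model: 1 with probability true_means[arm], else 0.
        reward = 1.0 if rng.random() < true_means[arm] else 0.0

        # Incremental update of the sample mean for the chosen arm.
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward

    return counts, estimates, total_reward


if __name__ == "__main__":
    counts, estimates, total = run_epsilon_greedy([0.2, 0.5, 0.8])
    print("pulls per arm:", counts)
    print("estimated means:", [round(e, 3) for e in estimates])
    print("total reward:", total)
```

At each time step the learner chooses one action (arm), observes a reward only for that action, and updates its estimate. The `epsilon` parameter controls how often it explores instead of exploiting its current best guess.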

Some Reinforcement Learning: The Greedy and Explore-Exploit Algorithms for the Multi-Armed Bandit Framework in Python

The Multi-Armed Bandit Problem and Its Solutions

Frontiers Multi-Armed Bandits in Brain-Computer Interfaces

Multi-Armed Bandit Testing - The Data Incubator

Multi-Armed Bandit: Solution Methods, by Mohit Pilkhan

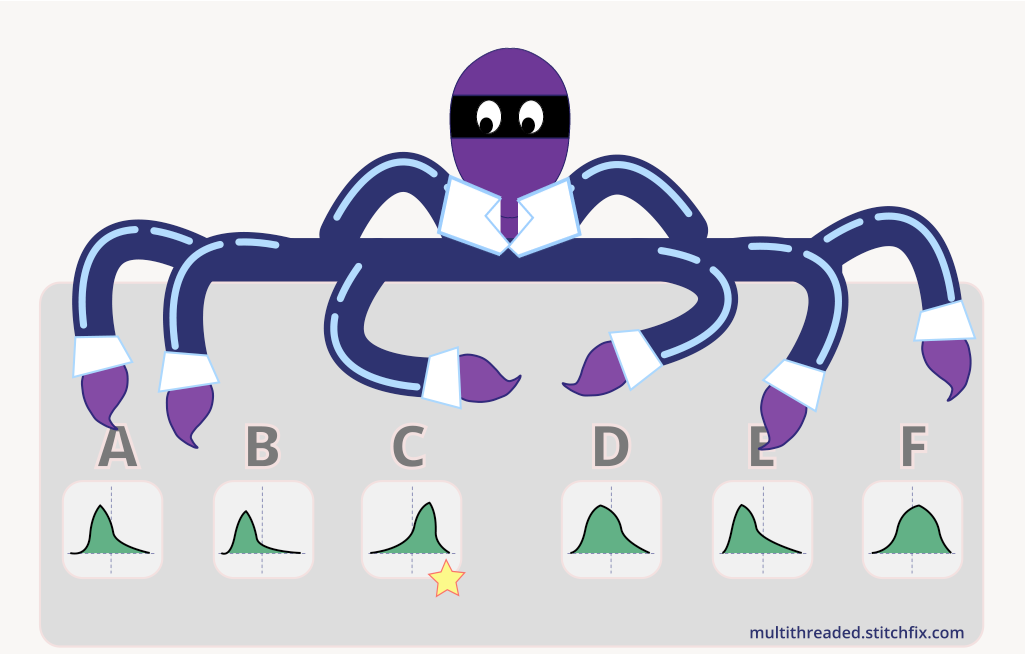

Multi-Armed Bandits and the Stitch Fix Experimentation Platform

Recommender systems using LinUCB: A contextual multi-armed bandit approach, by Yogesh Narang

Reinforcement Learning 2. Multi-armed Bandits
